Beyond Deployment: The Critical Tools for Kubernetes Day 2 Operations

Written by Justin Yue

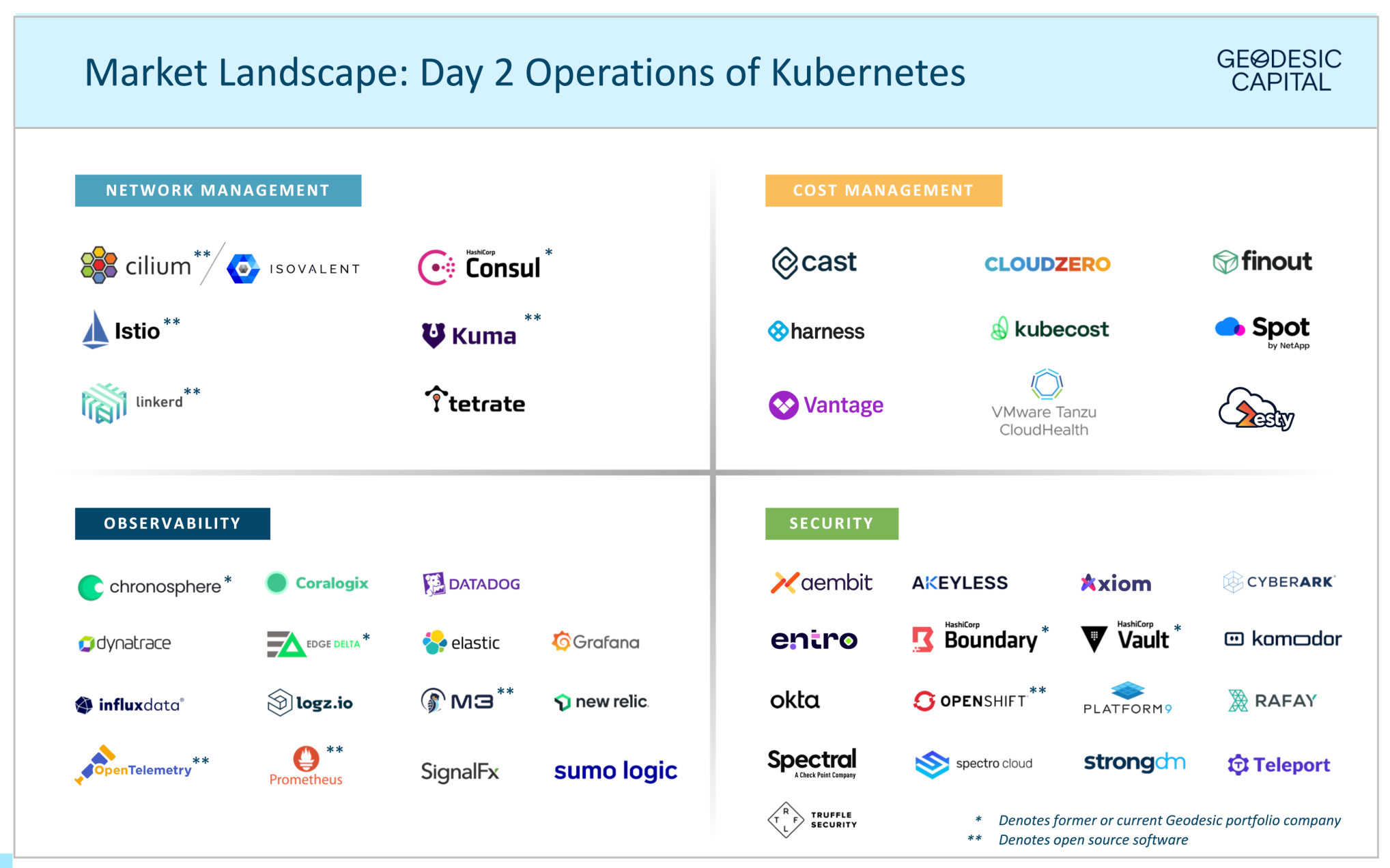

The modern-day enterprise’s infrastructure engineering needs continue to evolve. With the adoption of cloud computing and the increased use of microservices, software is being deployed and scaled in containerized environments. As a result, Kubernetes has emerged as the primary technology for managing these environments. According to the Cloud Native Computing Foundation (CNCF), 71% of Fortune 100 companies use Kubernetes as their primary container orchestration tool. Given the rise in the adoption of containers, I wanted to investigate the tooling needed to manage Kubernetes within an enterprise. The Geodesic team and I spoke with several cloud-native businesses that are end users of such solutions and gathered insights from Geodesic portfolio companies and other startups that offer tooling within this space. Based on our findings, I present a landscape (see below) of the vendors whose tools help manage the Day 2 operations of Kubernetes, along with considerations for startups offering a solution in this space. If you are a founder or executive of a business selling to cloud-native companies, this will give you an overview of the broader landscape and how your solution fits in.

As a refresher, Day 2 operations refer to the work that comes after deploying application code in a containerized environment: monitoring, securing and optimizing the performance of Kubernetes clusters, and the tools needed to do so.

I have outlined the different tooling that is supporting this phase of Kubernetes adoption, the use case of each technology, and the companies and open source projects that are providing an offering. Day 2 operations of Kubernetes can be broken into four categories: network management via service mesh, observability, security, and cost optimization. Let’s dive in.

Network Management via Service Mesh

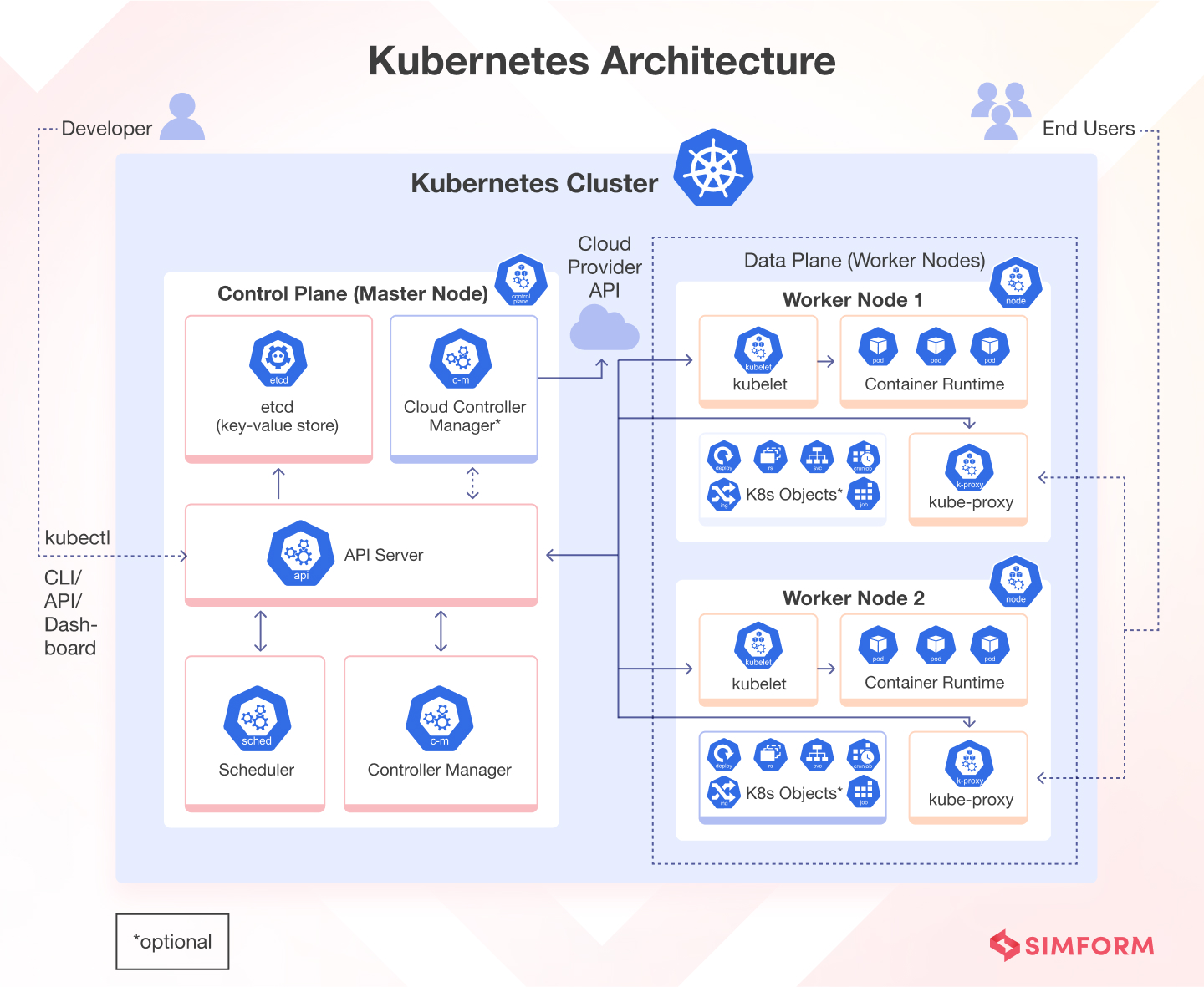

The network within a Kubernetes cluster connects the control plane (historically called the master node) with worker nodes, allowing work to be distributed among these components.

Organizations leverage a service mesh so that different components within a Kubernetes environment can communicate with one another securely over encrypted connections. One type of communication that happens within a Kubernetes environment is the communication between two pods. A pod is the underlying unit in a Kubernetes cluster that provides resources and directions on how to run containers. Without a service mesh or an equivalent mechanism, communication between pods is typically unencrypted by default, which makes it difficult to contain the effects of a compromised pod.

Example use case: The communication between pods within a Kubernetes cluster allows a distributed microservice architecture to function. For example, without this communication established, the front end of an application would not be able to talk to the backend application to execute a request.

Solutions:

Providers in the space include Cilium**/Isovalent (acquired by Cisco), HashiCorp Consul*, Istio**, Kuma**, Linkerd**, and Tetrate.

These vendors differ in their deployment methodology, which has implications for compute consumption and associated costs. HashiCorp Consul, Istio, Linkerd and Tetrate are deployed via sidecar proxies that run alongside each application and handle its traffic at runtime. Cilium is deployed via eBPF technology, which runs inside the Linux kernel and allows it to observe what kind of traffic goes through the network without placing a proxy next to every application.
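To make the compute implication concrete, here is a rough back-of-the-envelope sketch. It assumes that sidecar overhead grows with the number of pods, while a per-node agent grows with the number of nodes. The cluster size and the per-component CPU reservations are hypothetical numbers chosen for illustration, not measurements of any vendor listed above.

```python
def mesh_overhead_millicores(units: int, millicores_per_unit: int) -> int:
    """Total CPU reserved by mesh components, in millicores (1000 = one core)."""
    return units * millicores_per_unit


# Hypothetical cluster: 400 pods spread over 20 nodes.
PODS, NODES = 400, 20

# Hypothetical reservations: 100m per sidecar proxy, 500m per node-level agent.
sidecar_millicores = mesh_overhead_millicores(PODS, 100)
agent_millicores = mesh_overhead_millicores(NODES, 500)

print(f"sidecar model:  {sidecar_millicores / 1000:.1f} cores reserved")
print(f"per-node model: {agent_millicores / 1000:.1f} cores reserved")
```

Real overhead depends on traffic volume, configuration and the features enabled, so treat this only as a way to frame the comparison when evaluating vendors.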

Observability

Observability solutions collect, store and analyze metrics, logs and traces to provide an ongoing assessment of a software system’s overall health. By looking at these three signals together, teams can gain a full understanding of the health of their applications and diagnose issues that may impact app performance. For example, observability tools shed light on why applications may be responding more slowly (e.g. the apps are handling more complex transactions, or there is an issue with a specific microservice). Overall, observability tools provide context on application and infrastructure performance and are critical in helping identify the root cause of issues that negatively impact performance.

Example use case: Companies have Service-Level Agreements (SLAs) with their customers, which define the expected availability and performance of hardware and software. If a vendor’s application crashes and is not fixed in time, the SLA may be breached, leading to potential financial penalties and legal implications. Furthermore, if a software application breaks, this could leave data exposed, which becomes a security issue.
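As a rough illustration of how an availability SLA translates into a downtime budget, the sketch below converts a target percentage into allowed minutes of downtime and checks observed downtime against it. The 99.9% target and the 30-day window are assumptions chosen for the example. Real SLAs define their own targets, measurement windows and exclusions.

```python
def allowed_downtime_minutes(sla_percent: float, period_days: int = 30) -> float:
    """Minutes of downtime permitted by an availability target over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


def sla_breached(observed_downtime_minutes: float,
                 sla_percent: float,
                 period_days: int = 30) -> bool:
    """True if observed downtime exceeds the budget implied by the SLA."""
    return observed_downtime_minutes > allowed_downtime_minutes(sla_percent, period_days)


# Hypothetical target: 99.9% availability over a 30-day window.
budget = allowed_downtime_minutes(99.9)
print(f"downtime budget: about {budget:.1f} minutes")
print("breached after a 60-minute outage:", sla_breached(60, 99.9))
```

Observability tooling is what supplies the observed-downtime side of this comparison, which is why it tends to sit close to SLA reporting.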

Solutions for Infrastructure Observability:

- For Tracing: Chronosphere*, Coralogix, Datadog, Elasticsearch, Grafana Labs, InfluxData, New Relic, OpenTelemetry**, SignalFx (acquired by Splunk)

- For Logs: Chronosphere*, Coralogix, Datadog, Dynatrace, Edge Delta*, Elasticsearch, Grafana Labs, InfluxData, Logz.io, New Relic, OpenTelemetry**, SignalFx (acquired by Splunk), Sumo Logic

- For Metrics: Chronosphere*, Coralogix, Datadog, Dynatrace, Edge Delta*, Elasticsearch, Grafana Labs, InfluxData, M3**, New Relic, OpenTelemetry**, Prometheus**, SignalFx (acquired by Splunk)

Security

Security within the Day 2 operations of Kubernetes involves maintaining a secure Kubernetes environment that is resilient to threats. As a best practice, organizations deploying software into a Kubernetes environment should embrace least privilege access, that is, granting each stakeholder only the minimum access to corporate assets needed to do their job. Two mechanisms that can be leveraged for identity and access management are role-based access control (RBAC) and Secrets. RBAC ensures that only designated individuals have access to Kubernetes clusters and can make changes to these clusters. Teams may also choose to leverage Secrets to protect sensitive information such as API keys, passwords and tokens.
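To show what least privilege can look like in practice, here is a minimal sketch that builds a read-only Kubernetes `Role` manifest as a Python dictionary and runs a simplified permission check against it. The `rbac.authorization.k8s.io/v1` API version and the `rules` fields follow the Kubernetes RBAC API. The namespace `staging`, the role name `pod-reader`, and the `role_allows` helper are hypothetical, and the helper is a toy model rather than the real Kubernetes authorizer.

```python
import json


def make_read_only_role(namespace: str, name: str = "pod-reader") -> dict:
    """Build a namespaced Role manifest that grants read-only access to pods."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"namespace": namespace, "name": name},
        "rules": [
            {
                "apiGroups": [""],  # "" is the core API group, where pods live
                "resources": ["pods"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }


def role_allows(role: dict, verb: str, resource: str) -> bool:
    """Toy check: does any rule list both the verb and the resource?

    Ignores apiGroups, wildcards and resourceNames for brevity.
    """
    return any(
        verb in rule["verbs"] and resource in rule["resources"]
        for rule in role["rules"]
    )


role = make_read_only_role("staging")
print(json.dumps(role, indent=2))
print("can list pods:  ", role_allows(role, "list", "pods"))
print("can delete pods:", role_allows(role, "delete", "pods"))
```

In a real cluster, a Role like this only takes effect once it is bound to a user, group or service account through a RoleBinding.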

Example use case: Organizations leverage security tools to prevent assets in their software environment from being exposed to security vulnerabilities. If hackers are able to compromise a Kubernetes cluster, they could gain unauthorized access to the applications running on it, as well as to intellectual property (IP), business information or user data. Overall, this could lead to reputational damage and IP/cost implications for an organization.

Solutions:

- RBAC: Aembit, Axiom Security, HashiCorp Boundary*, Komodor, Okta, OpenShift by Red Hat**, Platform9, Rafay, Spectro Cloud, StrongDM, Teleport, Truffle Security

- Secrets Management: Akeyless, CyberArk, Entro Security, HashiCorp Vault*, OpenShift by Red Hat**, Platform9, Spectral (acquired by Check Point), Truffle Security

Cost Optimization

Organizations incur compute costs when they deploy and scale containerized applications. Teams may over-provision compute resources such as CPUs, meaning that the services are provided with more resources than what they actually need, which leads to resource underutilization. Cost optimization companies track and optimize spend across Kubernetes components and automate resource allocation.
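A simple way to reason about over-provisioning is to compare what a workload requests with what it actually uses. The sketch below flags workloads whose CPU utilization falls under a threshold. The workload names, the figures and the 50% threshold are hypothetical, and real cost tools draw on much richer data such as usage history, memory and pricing.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    requested_millicores: int  # CPU reserved for the workload
    used_millicores: int       # CPU the workload actually consumes


def utilization(w: Workload) -> float:
    """Fraction of requested CPU that is actually used."""
    return w.used_millicores / w.requested_millicores


def overprovisioned(workloads: list[Workload], threshold: float = 0.5) -> list[str]:
    """Names of workloads using less than `threshold` of their requested CPU."""
    return [w.name for w in workloads if utilization(w) < threshold]


# Hypothetical workloads for illustration only.
workloads = [
    Workload("checkout", requested_millicores=2000, used_millicores=400),
    Workload("search", requested_millicores=1000, used_millicores=800),
]

for w in workloads:
    print(f"{w.name}: {utilization(w):.0%} of requested CPU in use")
print("candidates for right-sizing:", overprovisioned(workloads))
```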

Example use case: Organizations may choose to hire FinOps engineers to manually provision more or fewer compute resources. Alternatively, organizations can leverage cost optimization tools to automatically scale these resources up and down, thereby ensuring that only the appropriate amount of resources is provisioned. Overall, this helps organizations optimize spend.

Solutions:

- Kubernetes Cost Management: CAST AI, CloudZero, Finout, Harness, Kubecost, Spot (acquired by NetApp), Vantage, Zesty

- Cloud Cost Optimization: CAST AI, CloudZero, Finout, Harness, Spot (acquired by NetApp), Vantage, VMware Tanzu CloudHealth, Zesty

Conclusion

The Day 2 operations of Kubernetes are a critical prerequisite for organizations looking to successfully adopt Kubernetes. This is because organizations need to run workloads in an enterprise-approved manner (e.g. ensuring there is ongoing networking, observability, security and cost monitoring in place).

If your company is building solutions for Day 2 operations, it’s important to take a step back from your specific vertical to look at the entire landscape, so you can build a better understanding of how your product fits into the larger mix of complexity your customers are navigating. Understanding this landscape will help you appreciate the areas of focus for your cloud-native customers and the tools they are using to address these use cases.

This is a space I am continuing to monitor and track developments in. If you are at a startup building a solution for the cloud-native landscape, I would love to hear from you.

* Denotes former or current Geodesic portfolio company

** Denotes open source software